import time

import torch


# Check whether a GPU is available
def check_gpu():
    if torch.cuda.is_available():
        print("GPU is available! Running test computation...")
        device = torch.device("cuda")
    else:
        print("No GPU found. Using CPU instead.")
        device = torch.device("cpu")

    # Repeat the computation many times to make sure the GPU is really exercised
    for i in range(100):
        start_time = time.time()
        a = torch.randn(1000, 1000, device=device)
        b = torch.randn(1000, 1000, device=device)
        c = torch.matmul(a, b)  # Matrix multiplication

        # Wait for the GPU to finish. Only synchronize when CUDA is in use,
        # because torch.cuda.synchronize() raises an error on a CPU-only setup.
        if device.type == "cuda":
            torch.cuda.synchronize()
        end_time = time.time()

        # Confirm that the computation ran on the GPU
        if c.device.type == "cuda":
            print(f"Iteration {i+1}: Computation successfully performed on GPU in {end_time - start_time:.4f} seconds!")
        else:
            print(f"Iteration {i+1}: Computation is not on GPU. Something went wrong!")


if __name__ == "__main__":
    check_gpu()
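The first iteration's time typically includes one-time CUDA start-up cost, and each measurement also covers creating the random tensors, so the printed numbers are only a rough check. If a steadier figure is wanted, one option is a few untimed warm-up runs followed by the median of several timed runs. The helper below is a minimal sketch of that idea, not part of the original script: the name `time_op` and its parameters `sync`, `warmup`, and `repeats` are assumptions made for illustration, and it uses only the standard library so it runs with or without a GPU.

```python
import statistics
import time


def time_op(op, sync=None, warmup=3, repeats=10):
    """Return the median wall-clock seconds of op() over `repeats` timed runs.

    `sync` is an optional zero-argument callable that blocks until queued
    device work has finished (hypothetical hook; for CUDA this would be
    torch.cuda.synchronize). Pass None when running on the CPU.
    """
    def run_once():
        op()
        if sync is not None:
            sync()

    # Untimed runs absorb one-time start-up cost
    for _ in range(warmup):
        run_once()

    # Timed runs: measure each one and keep the samples
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        samples.append(time.perf_counter() - start)

    return statistics.median(samples)
```

With tensors `a` and `b` already created on `device`, a call might look like `time_op(lambda: torch.matmul(a, b), sync=torch.cuda.synchronize if device.type == "cuda" else None)`. The median is used here because it is less sensitive to an occasional slow run than the mean.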

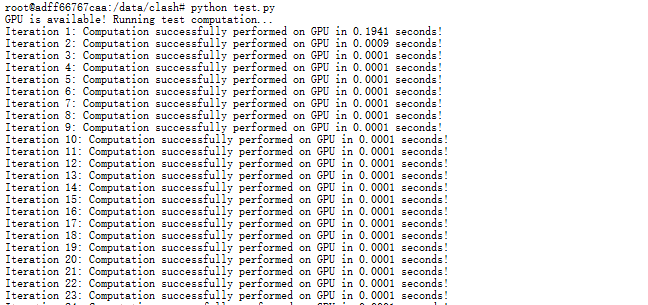

Test screenshot: